A paper recently caught my eye, and I think it is a great excuse to talk about data scale and dimensionality.

Vatanen, T. et al. The human gut microbiome in early-onset type 1 diabetes from the TEDDY study. Nature 562, 589–594 (2018).

In addition to having a great acronym for a study of child development, the authors also sequenced 10,913 metagenomes from 783 children.

This is a ton of data.

If you haven’t worked with a “metagenome,” it’s usually about 10-20 million short words, each corresponding to 100-300 bases of a microbial genome. It’s a text file with some combination of ATCG written out over tens of millions of lines, with each line being a few hundred letters long. A single metagenome is big. It won’t open in Word. Now imagine you have 10,000 of them. Now imagine you have to make sense out of 10,000 of them.
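To put rough numbers on that, here is a back-of-the-envelope sketch. The read count and read length are midpoints I picked from the ranges above, and I'm assuming one byte per base while ignoring read names and quality scores, so treat the result as an order of magnitude rather than a measurement.

```python
# Rough size of the raw text, using assumed midpoints of the ranges above.
reads_per_metagenome = 15_000_000  # assumption: middle of the 10-20 million range
bases_per_read = 150               # assumption: within the 100-300 base range
n_metagenomes = 10_913             # metagenomes sequenced in the TEDDY study

# One byte per base; read names and quality scores are ignored.
bytes_per_metagenome = reads_per_metagenome * bases_per_read
total_bytes = bytes_per_metagenome * n_metagenomes

gb_per_metagenome = bytes_per_metagenome / 1e9
tb_total = total_bytes / 1e12

print(f"~{gb_per_metagenome:.2f} GB of bases per metagenome")
print(f"~{tb_total:.1f} TB of bases across all metagenomes")
```

Under those assumptions a single metagenome is a couple of gigabytes of letters, and the whole study is tens of terabytes.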

Now, I’m being a bit extreme – there are some ways to deal with the data. However, I would argue that it’s this problem, how to deal with the data, that we could use some help with.

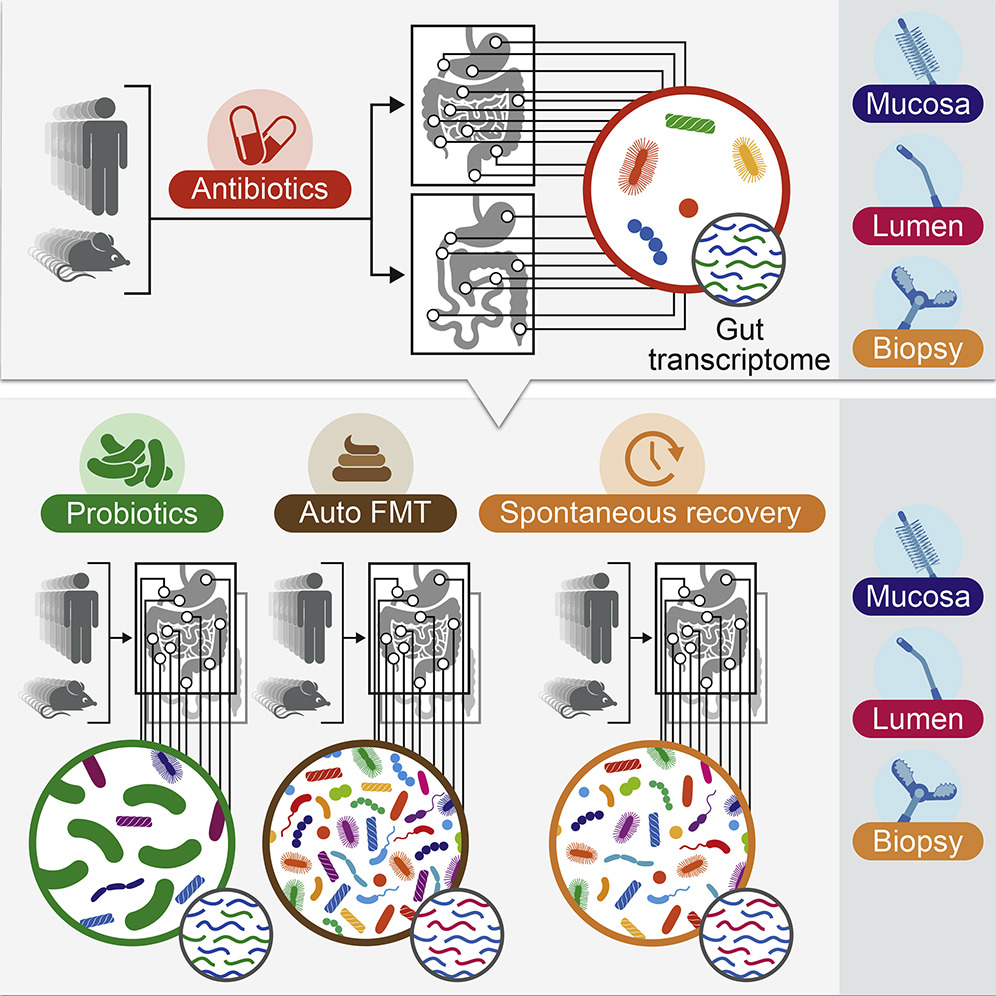

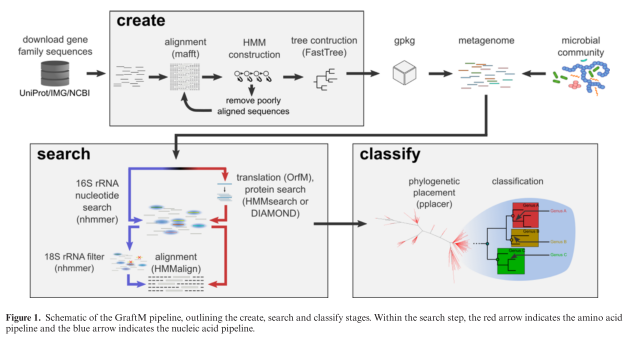

Taxonomic classification

The most effective way to deal with the data is to take each metagenome and figure out which organisms are present. This process is called “taxonomic classification” and it’s something that people have gotten pretty good at recently. You take all of those short ATCG words, you match them against all of the genomes you know about, and you use that information to make some educated guesses about which collection of organisms is present. This is a biologically meaningful reduction in the data that results in hundreds or thousands of observations per sample. You can also validate these methods by processing “mock communities” and seeing if you get the right answer. I’m a fan.
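To make the matching idea concrete, here is a toy sketch. It is not how any particular classifier works; real tools use far more sophisticated indexing and statistics. The reference genomes, reads, organism names, and the choice of k are all made up for illustration. Each read is assigned to whichever reference shares the most k-letter substrings (k-mers) with it.

```python
from collections import Counter

def kmers(seq, k):
    """Return the set of all length-k substrings of a sequence."""
    return {seq[i:i + k] for i in range(len(seq) - k + 1)}

def build_index(reference_genomes, k):
    """Map each k-mer to the set of organisms whose genome contains it."""
    index = {}
    for organism, genome in reference_genomes.items():
        for kmer in kmers(genome, k):
            index.setdefault(kmer, set()).add(organism)
    return index

def classify_read(read, index, k):
    """Return the organism sharing the most k-mers with the read.

    Returns None when nothing matches or when the top two organisms tie.
    """
    votes = Counter()
    for kmer in kmers(read, k):
        for organism in index.get(kmer, ()):
            votes[organism] += 1
    ranked = votes.most_common(2)
    if not ranked:
        return None
    if len(ranked) == 2 and ranked[0][1] == ranked[1][1]:
        return None
    return ranked[0][0]

def profile(reads, index, k):
    """Count classified reads per organism, dropping unclassified reads."""
    counts = Counter(classify_read(read, index, k) for read in reads)
    counts.pop(None, None)
    return counts

# Hypothetical reference genomes and reads, far shorter than real ones.
reference_genomes = {
    "organism_A": "ATGGCGTACGTTAGCCGATAGGCTA",
    "organism_B": "TTGACCGGTAACCTGAGTCCATGCA",
}
reads = ["GCGTACGTTAGC", "CCGGTAACCTGA", "GGGGGGGGGGGG", "TAGCCGATAGGC"]

k = 8
index = build_index(reference_genomes, k)
result = profile(reads, index, k)
print(dict(result))
```

The output is the kind of reduction described above: tens of millions of reads collapse into a short table of organisms and counts, and reads that match nothing known are simply left out.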

With taxonomic classification you end up with thousands of observations (in this case organisms) across X samples. In the TEDDY study they had >10,000 samples, and so this dataset has a lot of statistical power (where you generally want more samples than observations).

Metabolic reconstruction

The other main way that people analyze metagenomes these days is by quantifying the abundance of each biochemical pathway present in the sample. I won’t talk about this here because my opinions are controversial and it’s best left for another post.

Gene-level analysis

I spend most of my time these days on “gene-level analysis.” This type of analysis tries to quantify every gene present in every genome in every sample. The motivation here is that sometimes genes move horizontally between species, and sometimes different strains within the same species will have different collections of genes. So, if you want to find something that you can’t find with taxonomic analysis, maybe gene-level analysis will pick it up. However, that’s an entirely different can of worms. Let’s open it up.

Every microbial genome contains roughly 1,000 genes. Every metagenome contains a few hundred genomes. So every metagenome contains hundreds of thousands of genes. When you look across a few hundred samples you might find a few million unique genes. When you look across 10,000 samples I can only guess that you’d find tens of millions of unique genes.

Now the dimensionality of the data is all lopsided. We have tens of millions of genes, which are observed across only about ten thousand samples, so there are a thousand or more genes for every sample. A biostatistician would tell us that this is seriously underpowered for making sense of the biology. Basically, this is an approach that struggles even in a study with 10,000 samples, which I find to be pretty daunting.
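Here is the same comparison as a quick calculation. Every number is a round figure taken from the rough estimates in this post (and the unique gene count is only my guess), so the point is the ratio, not the exact values.

```python
# Round numbers from the estimates in this post; all are rough assumptions.
genes_per_genome = 1_000
genomes_per_metagenome = 300       # assumption: "a few hundred"
genes_per_metagenome = genes_per_genome * genomes_per_metagenome

n_samples = 10_000                 # roughly the size of the TEDDY dataset
n_organisms = 1_000                # assumption: "thousands" of taxa, low end
n_unique_genes = 20_000_000        # assumption: a guess at "tens of millions"

# Samples available per feature for each kind of analysis.
samples_per_feature = {
    "taxonomic": n_samples / n_organisms,
    "gene-level": n_samples / n_unique_genes,
}

print(f"genes per metagenome: {genes_per_metagenome:,}")
for analysis, ratio in samples_per_feature.items():
    print(f"{analysis}: {ratio:g} samples per feature")
```

With these figures the taxonomic table has about ten samples for every organism, while the gene-level table has a tiny fraction of a sample for every gene.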

Dealing with scale

The way that we find success in science is that we take information that a human cannot comprehend, and we transform it into something that a human can comprehend. We cannot look at a text file with ten million lines and understand anything about that sample, but we can transform it into a list of organisms with names that we can Google. I’m spending a lot of my time trying to do the same thing with gene-level metagenomic analysis, trying to transform it into something that a human can comprehend. This all falls into the category of “dimensionality reduction,” trying to reduce the number of observations per sample, while still retaining the biological information we care about. I’ll tell you that this problem is really hard and I’m not sure I have the best angle on it. I would absolutely love to have more eyes on the problem.
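As one illustration of what dimensionality reduction could look like here, consider grouping genes whose abundance rises and falls together across samples, on the assumption that genes sitting in the same genome should be correlated. This is a toy sketch of that one idea, not a description of any particular tool. The abundance table is invented and noise-free, the 0.9 correlation threshold is arbitrary, and computing a full gene-by-gene correlation matrix would not scale to millions of genes.

```python
import numpy as np

def group_coabundant_genes(abundance, min_corr=0.9):
    """Greedily group genes (rows) with correlated abundance across samples (columns).

    Returns a list of groups, each a list of row indices.
    """
    corr = np.corrcoef(abundance)
    unassigned = list(range(abundance.shape[0]))
    groups = []
    while unassigned:
        seed = unassigned[0]
        # The seed always joins its own group, so the loop always makes progress.
        members = [seed] + [g for g in unassigned[1:] if corr[seed, g] >= min_corr]
        groups.append(members)
        unassigned = [g for g in unassigned if g not in members]
    return groups

def summarize_groups(abundance, groups):
    """Collapse each group of genes into one row: the mean abundance per sample."""
    return np.vstack([abundance[members].mean(axis=0) for members in groups])

# Invented abundances of three hypothetical genomes across six samples.
genome_abundance = np.array([
    [1, 5, 2, 8, 3, 9],
    [7, 1, 6, 2, 9, 1],
    [3, 3, 8, 1, 1, 6],
], dtype=float)

# Each genome carries four genes; a gene's abundance is a scaled copy of its genome's.
scales = [1.0, 0.5, 2.0, 1.5]
gene_abundance = np.vstack(
    [scale * genome for genome in genome_abundance for scale in scales]
)

groups = group_coabundant_genes(gene_abundance)
reduced = summarize_groups(gene_abundance, groups)
print(gene_abundance.shape, "->", reduced.shape)
print(groups)
```

In this clean example twelve genes collapse into three groups, one per genome. Real data is noisy and sparse, which is a big part of why the problem is hard.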

It increasingly seems like the world is driven by people who try to make sense of large amounts of data, and I would humbly ask for anyone who cares about this to try to think about metagenomic analysis. The data is massive, and we have a hard time figuring out how to make sense of it. We have a lot of good starts to this, and there are a lot of good people working in this area (too many to list), but I think we could always use more help.

The authors of the paper who analyzed 10,000 metagenomes learned a lot about how the microbiome develops during early childhood, but I’m sure that there is even more we can learn from this data. And I am also sure that we are getting close to a world where we have 10X the data per sample, and experiments with 10X the samples. That is a world that I think we are ill-prepared for, and I’m excited to try to build the tools that we will need for it.